Pegasus IV Cluster

Serial (non-MPI) jobs

Pegasus IV compute nodes and their attributes

Compiling your code

Two compiler suites are installed on the Pegasus IV Cluster:

- the standard GNU compilers gcc and gfortran

- Intel Parallel Studio XE 2016 for Linux which includes Intel C/C++ Compiler 16 and Intel Fortran Compiler 16

We suggest you use the Intel compilers, since they tend to produce somewhat faster executables. This matters considerably if your simulations take days or even weeks to complete.

The Intel Fortran Compiler compiles Fortran 77, Fortran 90, Fortran 95, and Fortran 2003 programs. It is invoked by typing:

| ifort <file_name> |

This compiles the file <file_name> and, if there are no errors, creates an executable named "a.out". To test your program, simply type:

| ./a.out |

ifort has many compiler switches that change its behavior. To learn about them, consult the man page via

| man ifort |

or study the output of the command

| ifort -help |

which lists the possible switches. For example, if you wish the executable to have a name different from "a.out", use the switch "-o". The command

| ifort -o MyProgram test.f90 |

compiles the Fortran program in file "test.f90" and creates an executable named "MyProgram". Pay particular attention to the optimization switches, since they can make your executables noticeably faster. Basic optimization is enabled with the "-O" switch. The command

| ifort -O test.f90 |

compiles the Fortran program in file "test.f90" with optimization for speed enabled. For ifort, "-O" is equivalent to "-O2"; "-O3" enables more aggressive optimizations.

The Intel C compiler is invoked by typing:

| icc <file_name> |

and the C++ compiler can be called via

| icpc <file_name> |

The basic compiler switches of the C and C++ compilers are similar to those of the Fortran compiler, but there are some differences. As an example, the command:

| icpc -O3 -mssse3 -o myprog mycode.cpp |

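compiles the C++ program mycode.cpp using the highest standard optimization level (-O3) and generates code for the Intel Core 2 processor family with SSSE3 (-mssse3). The executable will be called myprog. Because it contains SSSE3 instructions, it will only run on processors that support SSSE3.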

Submitting a job

You are not supposed to run your code on the cluster server, and you are not supposed to log into the compute nodes directly. So how do you use the cluster?

The answer is the resource manager (a.k.a. batch system) Torque. In short, to obtain computational resources (such as a certain number of CPUs), you send your request to Torque. Torque puts your request into a waiting queue, and as soon as the requested resources are available somewhere in the Pegasus IV cluster, your computation starts.

There are two types of Torque jobs, interactive jobs and batch jobs. To submit a request for an interactive job, simply type:

| qsub -I |

and as soon as a machine is available, you'll get interactive access to it. To you, it will look as if you were logged directly into a compute node, i.e., you'll see a command prompt such as:

| [user@node004 ~]% |

assuming Torque assigned you compute node node004. You can now use it as you wish. To end the interactive job, type:

| exit |

Interactive jobs are mainly useful for test runs and for programs that need human input during execution.

The preferred way of using the Pegasus IV cluster is via batch scripts. These are special shell scripts that tell Torque what to do. Torque will queue your job until the requested resources are available, execute the job, save the output data where you want it, and even let you know when the job has finished. You just need to submit a script, and from then on Torque takes care of things.

Here is an example of a minimal Torque script:

|

#!/bin/tcsh
#PBS -l nodes=1:ppn=1:quad,walltime=400:00:00
#PBS -m ae
#PBS -M user@mst.edu
#PBS -q qsNormal
cd $PBS_O_WORKDIR
./a.out

|

Let's go through the script step by step. (We assume that your Torque script and your executables are in the same directory, and that you submit the job from that directory.)

- The first line means that the script is run by the tcsh shell.

- Lines starting with #PBS contain commands for Torque. In the second line, we request 1 core (ppn=1) of 1 node (nodes=1) with the attribute "quad" (see the top of this page for a list of available nodes and their attributes). We also request 400 hours (walltime=400:00:00, given as hours:minutes:seconds) for the job to complete. If the job has not finished within 400 hours, it will be killed, sorry!

- In the third line, we tell Torque to send email when the job aborts (a) or ends (e).

- The fourth line tells Torque to whom to send this email.

- The fifth line defines which queue should be used for the job. At present, the Pegasus IV cluster has only one execution queue, called qsNormal.

- The remaining lines define what you want your job to do. In the example, we first change into the appropriate directory ($PBS_O_WORKDIR contains the full path to the submission directory). The next line calls the program a.out.

- Note: The last line of the script file needs to be blank!

Now you are ready to submit your first job. Save the Torque script above into a file named, say, pbs_test. Typing the command

| qsub pbs_test |

submits the job. To check the status of your job, type:

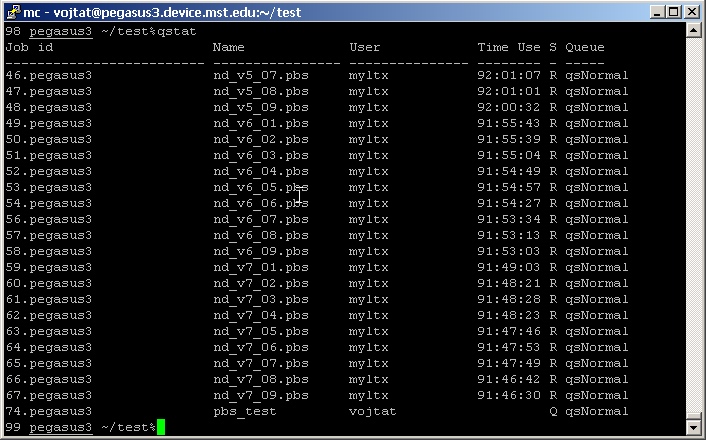

| qstat |

which prints a list of all jobs in the queue. A typical output could look like the following:

As the last line shows, Torque has queued your job, and it is waiting for execution.

If you need to delete a job, you can use the qdel command together with the job number. The command

| qdel 74 |

would delete job number 74.

Torque supports many more commands and options. A complete list can be found in the Torque Administrator Guide (pdf version).